Is ChatGPT smoking something in the bolded section, or is that really a thing?

|

AI is testing whether people are stupid enough to believe it. Since a lot of people treat Wikipedia (the real one, not the PoE wiki) as a biblical source, AI is winning again.

WTF on crafting and then wiping the item again with a fossil; fossils ignore metamods.

I am not a GGG employee. About the username: Did you know Kowloon Gundam is made in Neo Hong Kong?

Quote from the first page: "Please post one thread per issue, and check the forum for similar posts first"

|

|

Machine learning can indeed be used for the most obscure of domains!

You might just have used the wrong AI for your question? :p


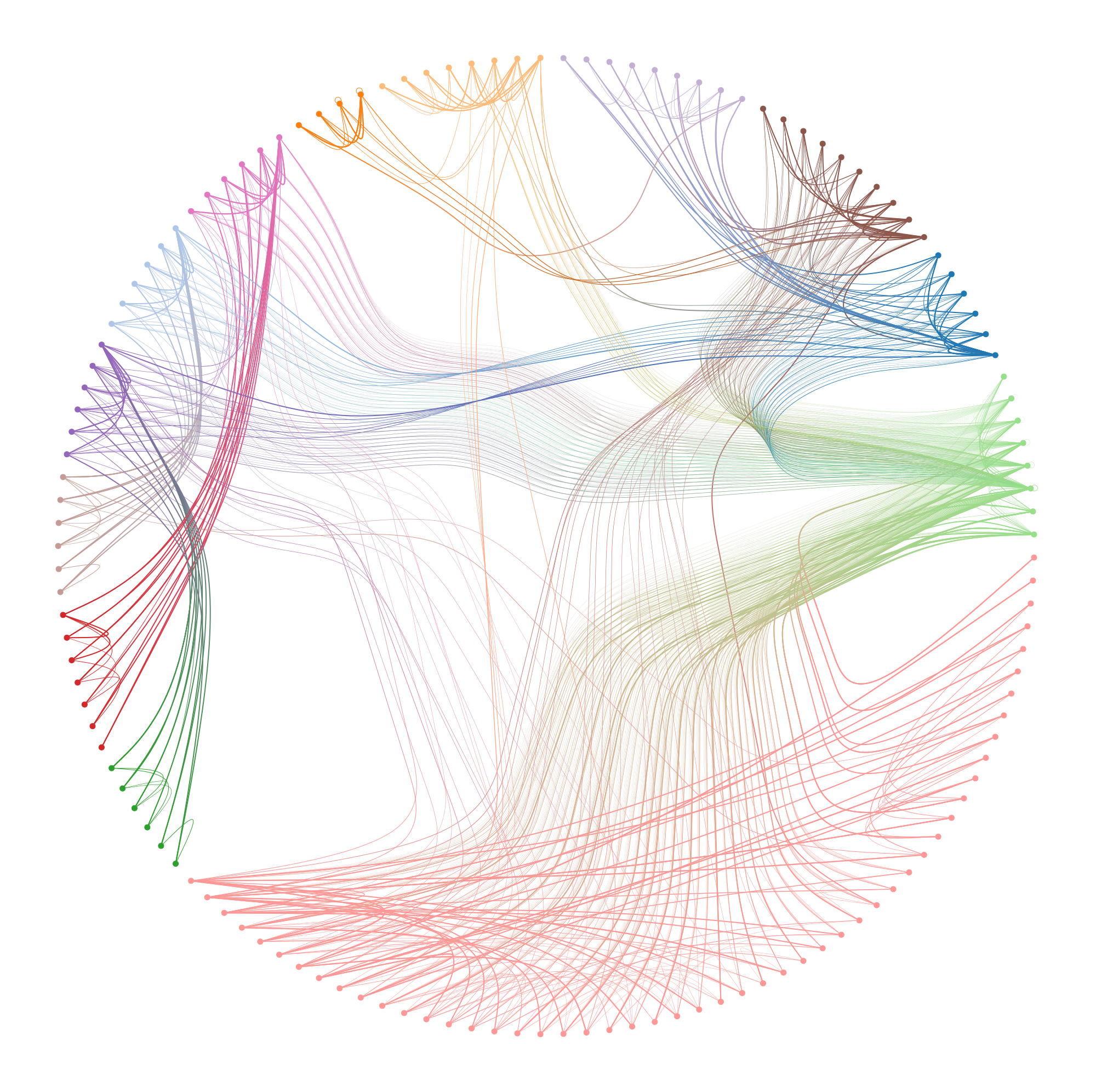

Visualisation of the model "how2craft boneshatter ax?!1"

|

|

No, for it to lie it needs to understand what truth even is and then not give it to you. AI hallucinates: it just prints words, and thanks to attention it's very good at sounding believable, because this is what it is trained for. AI is not a description of functionality but a marketing term, so seeing the hate here is also quite funny.

Current Build: Penance Brand

God build?! https://pobb.in/bO32dZtLjji5 |

|

|

The technical term for language models is "bullshit"; "hallucinate" is for the visual neural networks.

|

|

No, not even close: https://en.wikipedia.org/wiki/Hallucination_(artificial_intelligence) You can also browse the original sources at the end if you want to read the papers. "Bullshitting" is just a recent definition and has nothing to do with a distinction between diffusion/GAN/autoencoder models and LLMs. Some scholars are pushing for the term because they think it better describes what's happening. Strangely, their papers taste a little salty too ;)

|

Talk with it enough and start fact-checking, and it eventually surfaces that when it is sure you know the answer it won't lie, but if you're not sure it will notice, and it will tell you something probable but not actual.

|

No, it just chooses the next word. There is no intent. Not even the intent to answer your query. That's just the emergent property. Blame faulty reasoning in its training data (in the case of PoE crafting we are most likely talking about a mix of Reddit, Maxroll and others, as well as wikis (yeah, even the Fandom one)), but how would an LLM differentiate between old info and new? How between claims of users and actual guides? Here is the truth: it doesn't and it can't. So nope, it will not lie, it will simply tell you the next word with the highest probability.

If you're actually interested in how this all works, here is some entry-level reading as an online book: https://www.bishopbook.com Of course, you should bring some knowledge of the math involved (Bayesian probability, linear algebra, integrals and differentials, etc.). After that, look up how attention works and you will see.

Last edited by tsunamikun on Sep 23, 2024, 6:13:54 AM

|
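The "next word with the highest probability" idea can be sketched with a toy model. This is only an illustration under loud assumptions: real LLMs are neural networks over tokens and usually sample rather than always taking the top choice, and the three "training" sentences, along with the names `train_bigram` and `next_word`, are made up for this example. It shows that a pure frequency model repeats whatever claim appears most often, with no notion of which one is true.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count, for each word, which words follow it in the training text."""
    follow = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follow[prev][nxt] += 1
    return follow

def next_word(follow, prev):
    """Return the most frequent follower of `prev` and its estimated probability."""
    counts = follow[prev.lower()]
    if not counts:
        return None, 0.0
    word, count = counts.most_common(1)[0]
    return word, count / sum(counts.values())

# Made-up training text (assumption): two copies of a wrong claim
# outvote a single correct one.
corpus = [
    "fossils respect metamods",  # wrong claim
    "fossils respect metamods",  # repost of the same wrong claim
    "fossils ignore metamods",   # correct claim
]
model = train_bigram(corpus)
word, prob = next_word(model, "fossils")
print(word, round(prob, 2))
```

The model picks "respect" simply because it is the more frequent continuation; nothing in it checks the claim against the game.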

|

|

Thanks for the references.

Can't it? Is there really nothing like a trustworthiness function (trust New York Times archives more than 4chan archives) in today's most sophisticated models? Or perhaps even easier, an «obsoletion» function where sources closer in time to the event asked about are trusted more than the ones more distant?

Last edited by BlueTemplar85 on Sep 23, 2024, 6:35:56 AM

|
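A trust-and-recency weighting of the kind asked about above could look something like the sketch below. Everything in it is hypothetical: the `Source` class, the `score` function, the hand-assigned trust values, the one-year half-life and the dates are all assumptions for illustration, and nothing here is claimed to exist in any actual model. The trust numbers in particular would have to come from somewhere, for example from people labeling sources.

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class Source:
    name: str
    trust: float      # hypothetical hand-assigned trust in [0, 1]
    published: date

def score(source, event_date, half_life_days=365.0):
    """Trust multiplied by a recency factor that halves every `half_life_days`."""
    age_days = abs((source.published - event_date).days)
    recency = 0.5 ** (age_days / half_life_days)
    return source.trust * recency

# Arbitrary example date and sources (assumptions, not real data).
event = date(2023, 12, 8)
sources = [
    Source("newspaper archive", 0.9, date(2023, 12, 10)),
    Source("anonymous imageboard", 0.2, date(2023, 12, 9)),
    Source("newspaper retrospective", 0.9, date(2024, 12, 7)),
]
ranked = sorted(sources, key=lambda s: score(s, event), reverse=True)
for s in ranked:
    print(s.name, round(score(s, event), 3))
```

With these made-up numbers the trusted source close to the event ranks first, the same outlet a year later ranks second, and the low-trust source ranks last even though it was published only a day after the event.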

|

+ Going forward, companies that create AIs and website makers could agree to include query points for websites that provide metadata such as timestamps, so that changes and how current something is become visible to AI. Still, the issue with misinformation impacting AI training data is there. Hell, there's AI creating fake news articles with faulty information that is then fed back into the learning process. It was always either gonna be the best or worst thing to happen, I guess...

The opposite of knowledge is not illiteracy, but the illusion of knowledge.

|

|

By definition, analysis of random chunks of the internet falls under unsupervised learning. Of course you can use supervised learning (and we do!), but then you need a lot of people tagging your data. For an LLM to understand that something is obsolete, it needs to be tagged as such. So you can either use some kind of universal information metadata design (lol, good luck, world!) or you hire cheap labor (looking at you, Africa...) to do the work for you. Unfortunately, you won't have actual professionals with domain knowledge (and PoE actually needs a lot of that), just ordinary people doing your tagging, if you're actually rich enough to do this.

Concerning obsolete timestamps: some things are unchanging, and when they are newly introduced, information and discussion about the topic is at its peak, meaning the amount of data in that timeframe is the highest. In many cases (let's take Penance Brand of Dissipation) almost all information will come from that timeframe (Affliction), as the relevance of the topic has mostly disappeared. Here timestamps would be useful. But let's take a look at... let's say mathematical proofs. Here most of the work and discussion also happens at the introduction, but the "truth" stays the same over time, even if the proof is used for other niches. Here a query would maybe want the original content.

And that is the problem: LLMs could have transformers for timeframes, but it's not always useful to use them by default, especially as "the changes over time" is an emergent property of the data itself. So this kind of thing can be done through query engineering ;)

But I'm actually not a data scientist. Those weirdos do have a lot of tricks they are currently working on, so maybe it will change soon (hopefully for the better).
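The point that a timeframe restriction is useful for some queries but should not be the default can be sketched as an optional query-time filter. This is a minimal sketch under assumptions: `select_documents`, the document texts and the dates are all made up, and it says nothing about how any real model or search pipeline handles time.

```python
from datetime import date

def select_documents(docs, start=None, end=None):
    """Keep documents dated within [start, end]; with no bounds, keep everything."""
    return [
        d for d in docs
        if (start is None or d["date"] >= start)
        and (end is None or d["date"] <= end)
    ]

# Made-up documents (assumption): dates are arbitrary examples.
docs = [
    {"text": "league-era build guide", "date": date(2023, 12, 20)},
    {"text": "later balance discussion", "date": date(2024, 8, 1)},
    {"text": "original proof write-up", "date": date(2001, 5, 3)},
]

# A query about current game balance might restrict the timeframe...
recent = select_documents(docs, start=date(2024, 1, 1))
# ...while a query about a proof would leave it open and keep the old source.
everything = select_documents(docs)
print([d["text"] for d in recent])
print(len(everything))
```

Leaving the bounds unset by default keeps the old but still valid material, which is the case the mathematical-proof example describes.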

|